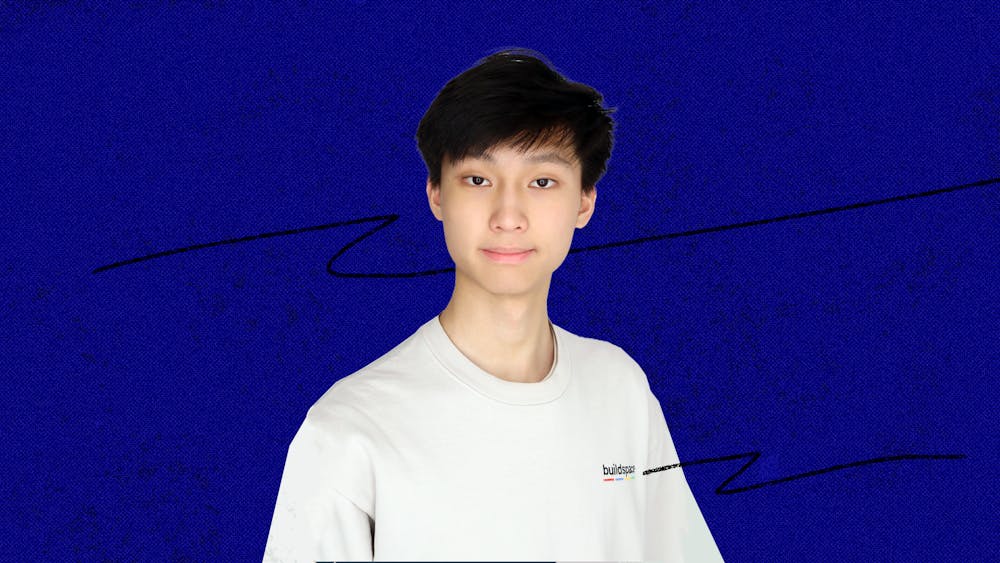

"We're trying to grow brains, literal human brains on a chip." It's the kind of idea that's so wild, so out there, you'd expect it from a sci-fi novel rather than a college first-year. Yet Maxx Yung (E '27), the self-assured founder of Nanoneuro Systems, makes the audacious seem not just doable but like the next logical step in the evolution of computing. Their infectious enthusiasm and unwavering confidence don't just pique curiosity; they trigger a "why didn't I think of that?" moment, even though, let's be honest, none of us were remotely close to envisioning, let alone actively working toward, a future where artificial intelligence is powered by actual brains.

Maxx recalls two seemingly unrelated life experiences that ignited their commitment to revolutionizing energy efficiency and sustainable AI. The first catalyst came during their second visit to Australia's Great Barrier Reef, where the vibrant coral oasis they had witnessed less than a decade earlier had turned into a desolate expanse of stark gray.

The second was the loss of their grandmother to Alzheimer's disease, which spurred Maxx into six years of dedicated brain research. From seventh to 12th grade, Maxx studied the intricacies of neuronal memory, exploring how the brain stores and processes information. During a research presentation at Stanford, a professor approached Maxx to discuss mathematical models of neurons, likening neurons to electrical components such as the transistors and capacitors used in engineering. That conversation sparked the idea for Nanoneuro Systems: Maxx realized they could bridge their knowledge of memory with the computational principles of electrical engineering, translating what they knew about neurons into an electrical engineering model.

Maxx broke down the crux of their mission with a clarity that actually resonates: "Sometimes you may notice your computer heats up, right? That's because the silicon chips we all use are highly energy inefficient." This inefficiency has major consequences for the future of AI.

The recent surge in AI has left us grappling with monumental energy consumption and the colossal computing power needed to train and deploy these advanced models. The scale of the challenge becomes stark when you consider that data centers currently account for two percent of global electricity usage, a share projected to catapult to an astounding seven percent by 2027. Maxx and AI experts alike predict that we're hurtling toward a future where the resources required to sustain AI demand might, quite literally, run dry.

According to Maxx, there is a three-fold approach to combating the escalating energy demands of AI. The first strategy involves making the training of AI models more efficient, a path trodden by tech giants like Microsoft and Amazon. The second centers on bolstering energy sources, exemplified by Microsoft's reported plans to power its data centers with nuclear energy.

Maxx is tackling the third approach: improving computing efficiency itself, an area where they see little progress among competitors and where computer architecture remains antiquated. Today's architecture is a relic dating back to the 1950s or even the 1940s, and that outdated framework has spurred Maxx to advocate for a new model aligned with the demands of contemporary technology. Nanoneuro Systems emerged in this context, drawing inspiration from biological models, most notably the remarkably efficient human brain.

"You can probably eat one hamburger, and your brain can function for a whole day, right? So the brain is incredibly energy-efficient," explains Maxx. This efficiency, they point out, stands in stark contrast to traditional computing models, which can demand tens of thousands of examples for comparable tasks.

This observation fuels Maxx's ambitious vision: cultivating human neurons on a chip to serve as the computational powerhouse for future AI models. Instead of relying on traditional silicon chips, like those Nvidia designs for training AI models, this approach uses actual brain tissue. The process involves growing brain cells, or "small brains," on the chip and enabling them to send and receive signals. Inputs can be sent into these small brains and outputs read back out, pointing toward a biologically based computing paradigm.

“When I came to Penn, I thought I’d start this idea in four to five years, once I had the capability to do so. But when I got here I discovered the Nanotechnology Center, where I could actually fabricate my own chips, and so I decided to just start,” Maxx said.

Throughout October 2023, Maxx spent all of their free time at the Singh Center for Nanotechnology. They are so passionate about their computer chips that school sometimes falls by the wayside. “Yeah, I mean, I'm just gonna be honest, I don't go to class. I learn the material outside of class, and I think I'm fine. So as long as I maintain good grades, then I spend my time researching more about this startup.”

Working alone in their spare time, Maxx has come remarkably far with their venture. Though they predict that a commercially viable solution is five to ten years out, they have already completed a basic prototype. When they seek collaboration and assistance from fellow researchers, however, they are often met with skepticism. "My idea, admittedly, does sound impossible," Maxx acknowledged. As a result, many professors decline to collaborate, citing busy schedules. "It's a challenging landscape, compounded by a critical issue: funding," they said candidly.

“I would say that a lot of people when they hear my idea, they must assume that I'm super smart, or like an outlier. And I don't think that's the case. I think a lot of it is luck, but also a lot of it is just a little bit of progress every single day. And so I don't want to discourage anyone,” says Maxx. “I want to encourage everyone that you can do your projects, even some wild science fiction fantasy.”

Editor's note: A previous version of this article included a misspelling of the source's last name and referred to them with the wrong pronouns. The article has also been updated to reflect the source's financial situation more accurately. Street regrets these errors.